After covering Docker installation, we now continue with Docker Swarm.

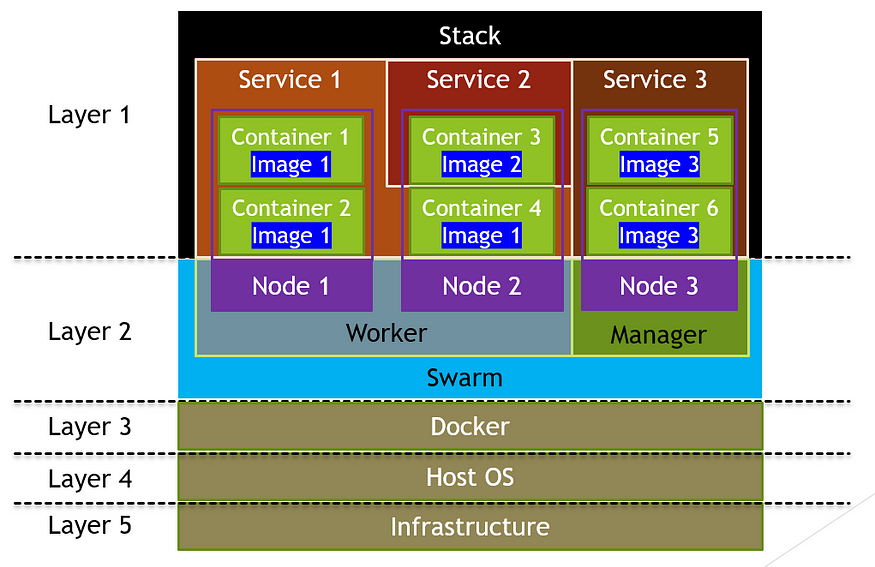

A swarm consists of multiple Docker hosts which run in swarm mode and act as managers (to manage membership and delegation) and workers (which run swarm services). A given Docker host can be a manager, a worker, or perform both roles.

One of the key advantages of swarm services over standalone containers is that you can modify a service’s configuration, including the networks and volumes it is connected to, without the need to manually restart the service.
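As a rough illustration of that point, the sketch below attaches an overlay network and a volume to a running service with `docker service update`. The service name `web`, the network `backend`, and the volume `webdata` are hypothetical and assumed to exist already. The swarm replaces the service's tasks itself, so there is no manual restart step.

```shell
# Hypothetical names: service "web", overlay network "backend", volume "webdata".
# Run on a manager node; the swarm rolls the change out to the service's tasks.
attach_network_and_volume() {
  docker service update \
    --network-add backend \
    --mount-add type=volume,source=webdata,target=/data \
    web
}

# Usage (manager node, with "web" and "backend" already created):
#   attach_network_and_volume
```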

When Docker is running in swarm mode, you can still run standalone containers on any of the Docker hosts participating in the swarm, as well as swarm services. A key difference between standalone containers and swarm services is that only swarm managers can manage a swarm, while standalone containers can be started on any daemon. Docker daemons can participate in a swarm as managers, workers, or both.

Ports used by swarm

- TCP port 2377 for cluster management communications

- TCP and UDP port 7946 for communication among nodes

- UDP port 4789 for overlay network traffic
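One way to open these ports is sketched below. It assumes the hosts use `ufw` as their firewall, which the setup here does not state, so adapt the commands if another firewall is in place.

```shell
# Assumes ufw manages the firewall on each swarm node (an assumption, not from the article).
open_swarm_ports() {
  sudo ufw allow 2377/tcp   # cluster management communications
  sudo ufw allow 7946/tcp   # communication among nodes
  sudo ufw allow 7946/udp   # communication among nodes
  sudo ufw allow 4789/udp   # overlay network traffic
}

# Usage: run on each node before it initializes or joins the swarm.
#   open_swarm_ports
```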

Running Swarm

Running swarm on a single node (manager)

    docker swarm init

Running swarm on multiple nodes

Check the IP address with the `ifconfig` command:

    pi@prod:~ $ ifconfig
    eth0: flags=4099<UP,BROADCAST,MULTICAST>  mtu 1500
            ether dc:a6:32:fa:3c:16  txqueuelen 1000  (Ethernet)
            RX packets 0  bytes 0 (0.0 B)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 0  bytes 0 (0.0 B)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

Initialize the swarm, advertising the manager's address:

    docker swarm init --advertise-addr 192.168.100.100

The output should be:

    Swarm initialized: current node (3bqgliridg309s4htybzuf5av) is now a manager.

    To add a worker to this swarm, run the following command:

        docker swarm join --token SWMTKN-1-1e72di2xylqk821ha3t4crnv6revkwqelj799nyqeixsjchvsz-2aq3aynkz8ty22ggljqljvqdm 192.168.100.100:2377

    To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

Join the swarm from a worker

    ubuntu@bw:~$ docker swarm join --token SWMTKN-1-1e72di2xylqk821ha3t4crnv6revkwqelj799nyqeixsjchvsz-2aq3aynkz8ty22ggljqljvqdm 192.168.100.100:2377
    This node joined a swarm as a worker.

To verify, list the nodes from the manager:

    pi@prod:~ $ docker node ls
    ID                            HOSTNAME        STATUS    AVAILABILITY   MANAGER STATUS   ENGINE VERSION
    x6y5c250vwkxtkq2m22tecn9j     bw.iolib.link   Ready     Active                          20.10.7
    3bqgliridg309s4htybzuf5av *   prod            Ready     Active         Leader           20.10.12

Nodes

A node is an instance of the Docker engine participating in the swarm. You can also think of this as a Docker node. You can run one or more nodes on a single physical computer or cloud server, but production swarm deployments typically include Docker nodes distributed across multiple physical and cloud machines.

Service and Task

A service is the definition of the tasks to execute on the manager or worker nodes. It is the central structure of the swarm system and the primary root of user interaction with the swarm.

When you create a service, you specify which container image to use and which commands to execute inside running containers.

In the replicated services model, the swarm manager distributes a specific number of replica tasks among the nodes based upon the scale you set in the desired state.

For global services, the swarm runs one task for the service on every available node in the cluster.
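The two service models can be sketched with `docker service create` as follows. The service names `web` and `node-agent` and the `nginx:alpine` image are placeholders chosen for illustration, not part of the setup above.

```shell
# Hypothetical service names and image; run on a manager node.

# Replicated: the manager schedules exactly 3 replica tasks across the nodes.
create_replicated_service() {
  docker service create --name web --replicas 3 nginx:alpine
}

# Global: one task on every available node in the cluster.
create_global_service() {
  docker service create --name node-agent --mode global nginx:alpine
}

# Usage:
#   create_replicated_service
#   create_global_service
#   docker service ls
```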

A task carries a Docker container and the commands to run inside the container. It is the atomic scheduling unit of swarm. Manager nodes assign tasks to worker nodes according to the number of replicas set in the service scale. Once a task is assigned to a node, it cannot move to another node. It can only run on the assigned node or fail.
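To see this in practice, the sketch below lists a service's tasks along with the node each one was assigned to, then changes the replica count. It assumes a replicated service already exists; the name `web` in the usage lines is hypothetical.

```shell
# List the tasks of a service and the node each task was assigned to.
list_tasks() {
  docker service ps "$1"
}

# Change the desired replica count; the manager adds or removes tasks to match.
scale_service() {
  docker service scale "$1=$2"
}

# Usage (manager node, hypothetical service "web"):
#   list_tasks web
#   scale_service web 5
```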

Load Balancing

The swarm manager uses ingress load balancing to expose the services you want to make available externally to the swarm. The swarm manager can automatically assign the service a PublishedPort or you can configure a PublishedPort for the service. You can specify any unused port. If you do not specify a port, the swarm manager assigns the service a port in the 30000-32767 range.
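A minimal sketch of configuring a PublishedPort explicitly is shown below. The service name `frontend`, the `nginx:alpine` image, and port 8080 are assumptions made for the example. The address in the usage lines is the manager address used earlier, but any node's address could be passed instead.

```shell
# Publish container port 80 on port 8080 of the swarm nodes (hypothetical service "frontend").
create_published_service() {
  docker service create --name frontend \
    --publish published=8080,target=80 \
    --replicas 2 nginx:alpine
}

# Print the HTTP status code returned by the given node on the published port.
check_published_port() {
  curl -s -o /dev/null -w '%{http_code}\n' "http://$1:8080"
}

# Usage:
#   create_published_service
#   check_published_port 192.168.100.100
```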

External components, such as cloud load balancers, can access the service on the PublishedPort of any node in the cluster whether or not the node is currently running the task for the service. All nodes in the swarm route ingress connections to a running task instance.

Swarm mode has an internal DNS component that automatically assigns each service in the swarm a DNS entry. The swarm manager uses internal load balancing to distribute requests among services within the cluster based upon the DNS name of the service.
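The DNS-based lookup can be tried with a sketch like the one below. The network name `appnet`, the service name `api`, and the `nginx:alpine` and `busybox` images are all assumptions made for the example. The overlay network is created with `--attachable` so that a standalone test container can join it.

```shell
# Hypothetical names: overlay network "appnet", service "api". Run on a manager node.
create_network_and_service() {
  docker network create --driver overlay --attachable appnet
  docker service create --name api --network appnet --replicas 2 nginx:alpine
}

# From a throwaway container on the same network, fetch the service by its DNS name.
call_service_by_name() {
  docker run --rm --network appnet busybox wget -qO- http://api
}

# Usage:
#   create_network_and_service
#   call_service_by_name
```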